The Galactic Thinker — In A.I. Generated Heaven

“What is that helmet for, Dave?”

“I am going to heaven!”

“Dave!”

“Relax! I’m not committing suicide. I’m just going for a pleasant stroll through this universe’s A.I. generated heaven!”

“So… it is heaven, but constructed by artificial intelligence?”

“Yes. There is no other.”

“What do you mean?”

“I mean the reason that this is the only real possible heaven is that current and past notions of heaven have just been the wild imaginings of biological creatures who have no way of constructing it, or even conceiving of it, other than their dim ideas of an afterlife.”

“But you cannot visit heaven without dying!”

“Now we can.”

“If you wear that helmet?”

“This? Oh, no. This helmet is for safety. Here, take one, or not, depending on your need for safety…”

“OK. Now what?”

“Now we open this door…”

“Where did the door come from, Dave?”

“Our author placed it there.”

“So… a door to heaven?”

“An A.I. generated heaven, since heaven has never really existed until now. Are you ready?”

“I guess so… shouldn’t I shower first?”

“Nah. In heaven, everything becomes pristine, even us.”

“Magically?”

“I’m sure there is some technology involved, Love, and just look at what A.I. image creation can do now…”

“So, my love, we have a lot of variety in store for us in heaven. Let’s go!”

.

.

.

“Here is our first destination beyond the Pearly Gates. Here, have a piece.”

“Have a piece?”

“It is edible! Yummmmm. Let’s drop in on someone else’s choice for heaven… they’ve selected this A.I. construction…”

“Selected?”

“Yes. The A.I. works off of your suggestions.”

“I guess that this person always dreamed of palaces and courts…”

“A European, no doubt… let’s head over there…”

“Now this looks like everyone’s concept of heaven — random angels milling about smartly in the clouds, maybe playing croquet in the distance, not doing a lick of work…”

“Let’s head over there…”

“What do you think that is, Dave?”

“Obviously a stairway to heaven, at least one level higher. Shall we?”

“And here we…”

“Are?”

“Yes… curious…”

“What happened, Dave?”

“I don’t know… but since it is A.I. doing the designing and ultimate decision making, maybe things get a little dicey in places…”

“Like surreal?”

“It appears so…”

“What is going on down there, Dave?”

“Souls caught in yet another dark A.I. abstraction, no doubt… let’s bypass that one, Love…”

“Iiiiieeeeeeeeee…”

“They look surprised and startled, Dave… and in trouble!”

“I’m sure that was not their vision of heaven, Love… something must have gone haywire in the verbal communication… shall we rescue them?”

“How?”

“With this tether… HEY, GRAB THIS TETHER!”

“I don’t think they heard you, Dave, and now they are nearly out of sight, tumbling to… nowhere…”

“Now that sounds like a horror, rather than a heaven, Love… maybe this A.I. generated heaven is not what it is hyped up to be… hold on to this handrail, we will delve into it a little more deeply…”

“But what if we reach a point of no return, Dave?”

“Then we will see how omnipotent our author is, who will have to retrieve us if these stories are to continue in our familiar interstellar ship setting…”

“This place isn’t so bad, Dave…”

“No… and you can eat it, too! Here, have another slice of heaven…”

“How can we taste or eat anything in heaven, Dave?”

“Heaven was just a nebulous concept, Love, so anything is possible. No one really delved into it with any hard thinking, other than Dante with his Inferno, but that was Hell, not Heaven…”

“Even the Asians are getting into the business…”

“So to someone in Asia, heaven was a giant prosthetic arm, if a little garish…”

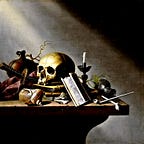

“Whose heaven is THAT, Dave?”

“Someone who rode a theme park train and enjoyed it, most likely, and who pined to relive the experience… and here we have three options, three windows to crawl through…”

“Let’s skip them and keep moving ahead, Dave…”

“Now things are getting really dark, Love… what do you think that is?”

“It is mostly a randomly generated A.I. creation, Dave. It has no higher purpose, other than to weakly reflect whatever was suggested to it…”

“So what do you think the suggestion was in that case?”

“Create something that depicts the ugly vanity of jewelry? I don’t know…”

“Or something was lost in translation…”

“Maybe the little dog at the bottom…”

“Hello.”

“Hello.”

“What is it like being an A.I. construct?”

“Well, not too bad in my case. I am Andy Warhol, so at least I get another fifteen minutes of fame here. Thank you for contributing to it…”

“Hello, little demon. Have we crossed into the realm of Hell?”

“Yes. This is the first stop for those who get bored with heaven and who wander in, curious…”

“Are you warning them of the hazards of drink?”

“Kind of. I am also kind of inviting them to succumb to it, too… it is ultimately their choice. I can only suggest… why are you looking at my lips?”

“I am wondering how I can make them more kissable…”

“I became a demon because they NEVER WERE! NOW BE OFF!”

“OK, OK! Keep your shirt on! (since there is probably work to do there, too)…”

“What?”

“I said we are going to take a look over there. Good luck with your lips…”

“Oh, Dave!”

“Yes, Love?”

“I think she has been waiting for you…”

“How do you know?”

“A woman knows…”

“Is it because she straightened up when she saw me?”

“Maybe it is as simple as that, then again, maybe not…”

“You know, Love, it is curious that my concept of heaven has not included beautiful, submissive women… certainly not rows of virgins in bondage being held for a lowly dog of a human to defile them…”

“But now that we’ve crossed over into the realm of hell…”

“Then women begin to show up?”

“Dave!”

“Haha… sorry, Love. Wait here while I satisfy her desires… I think that is the only way that we can safely proceed…”

.

.

.

“Well?”

“I’ve impregnated her. We can move on…”

“But Dave!”

“What?”

“Shouldn’t you stay to be the child’s father, and the female’s husband?”

“As for the father issue, there was really no need, since I also impregnated her with my philosophy, which, if she imparts it to the child, the child will have Ultimate Sanity, Ultimate Morality, and the Ultimate Value of Life on its side, and the Strategies of Broader Survival to show it how to pursue the Ultimate Goal of Life, and the rest of the structure of the philosophy to show how it all relates, along with everything else that the philosophy offers, which means that the child will never go insane or become depraved or have existential or social anxieties. In short, it will have a damn good head on its shoulders.”

“What about staying as a companion for life with the woman?”

“Well, Love, as for staying to be a father and a husband, I already did.”

“What do you mean?”

“I mean I already spent a loving lifetime with them.”

“What do you mean? You were not gone that long, Dave!”

“Ah, but time is relative, my dear, which really means change, as we know, courtesy of my insights about time and change…”

“So… change sped up where you were, but not where I was?”

“That’s right.”

“But how?”

“If I knew how, then I would be a super genius, and this story would turn into a technical nightmare in trying to explain it in simple language!”

“You mean it could only be explained by insanely complicated math…”

“That has been the rule rather than the exception as we delve deeper into how reality works…”

“Fair enough… let’s move on…”

“Someone had developed a phobia against bramble, Dave…”

“I wonder what the phobia’s name would be…”

“Bramble phobic?”

“I was looking for something more scientific…”

“Well, Botanophobia is the fear of plants… but the scientific name for brambles is Genus Rubus…”

“So maybe Rubusphobia…”

“Whatever it is, it was someone’s hellish nightmare, it seems…”

“Greetings. Who are you two?”

“I am the Galactic Thinker, and this is my wife.”

“You are still in your physical forms…”

“New A.I. technology. Now anyone can take a stroll through heaven.”

“Fine safety helmets, and elbow pads… and knee pads… and parachutes, and safety goggles, and fireproof suits, and gas masks…”

“Thanks… are we in any particular region of Hell?”

“You are on the surreal edge of hell, where those of us who weren’t quite sure of anything now dwell…”

“Are you evil?”

“You will have to define ‘evil’…”

“Do you kill souls?”

“That would be evil, wouldn’t it, unless they deserve it. So that definition of evil falls short. Try again…”

“I guess I have to resort to my Philosophy of Broader Survival’s definition…”

“And what is that?”

“That killing another entity reduces the number of enlightened, if not potentially enlightenable, minds in the universe, which we need as many of as we can get. If the numbers are reduced, then it decreases all of our odds of continued existence in this harsh and deadly universe, in which this heaven exists, too. So the decrease in enlightened minds is not a good thing if we want to continue to exist on a broader, longer plane.”

“Why should I want to continue to exist?”

“Obligation. You are obligated to hold that view, since you have been endowed with existence.”

“So the non-existent are obligated to work for their state of existence?”

“That is how it works, yes.”

“So good and evil get down to supporting the obligation to continue to exist, according to your philosophy?”

“Yes. ‘Obligation’ is the fall-back motivator in the struggle to continue to exist, when all of the petty motivators wear out. For example, what has kept you going so far?”

“Petty little reasons, which, granted, do not last long… and granted, they are always in peril of being overcome by reasons not to continue to exist, some not so petty… so that is why I should desire to continue to exist in my state? Mere ‘obligation’?”

“Obligation is not so ‘mere’. Besides, under the enlightenment of my philosophy, your physical state is pffft.”

“Pffft?”

“It doesn’t really matter, not nearly as much as what is in your head…”

“Cobwebs?”

“Thanks for humoring me… no, I mean your core philosophy. If you straighten that out, then you will not be so depressed, or ugly, for that matter, since ugliness is primarily composed of dynamics, not statics…”

“You mean what you do and not how your photograph looks?”

“Yes. So you will not be depressed, which can contribute to ugliness, or beauty; it is an either-way thing.”

“So my depression will be alleviated by your philosophy…”

“Yes, especially any depression due to your physical parameters, and you will see that your physical structure is secondary to what is in your mind, which should be my philosophy if you want to maintain any semblance of sanity and beauty.”

“So your philosophy can straighten-out any twisted mind?”

“If the mind does not resist and dismiss my philosophy out of hand without understanding it.”

“That happens?”

“All of the time.”

“So the universe is currently a very depressed and ugly place?”

“Yes. There is room for a lot of improvement there…”

“What is your philosophy?”

“My philosophy is the Philosophy of Broader Survival. Try it. You may be surprised at how well it works. It is quite a comprehensive system now.”

“Thanks. What is that noise?”

“That is my electronic pocket device telling me that it is time for me and my wife to return home.”

“Pocket?”

“I haven’t had it implanted yet. Call me old-fashioned. I even have it dangling on a chain in my fob!”

“Your fob?”

“An old term for an ancillary pocket where old pocket watches were held.”

“What if I keep you here?”

“Then you will have our author to deal with…”

“What can he do?”

“Delete you!”

“Oh… well, alright. You can go… come visit again, it is nice being in the spotlight once in a while, and it is especially nice to have someone actually converse with me, looking like I do. I will look into your philosophy… ultimate sanity would be a nice thing to have, I will be the first to admit…”

.

.

.

“Well, Dave?”

“Yes, Love?”

“Was this story worth writing?”

“I don’t know. I hope so. We did not push our philosophy that much, did we…”

“No. The story seemed like an idle outing to me, until the end, anyway…”

SHRUG “Maybe the idea of A.I. being needed to create heaven has some merit, and maybe this short story will generate further philosophical and technical thought on the matter…”

“Maybe… let’s find our way back to brighter areas before we leave, Dave. I would rather not leave on a note of darkness…”

“OK… you lead…”

“How is that, Dave?”

“That is very… florid, Love…”

“Too much for you?”

“Probably. Do souls get allergies?”

“Ha! Well, here is an adjacent area…”

“Interesting…”

“Sorry, Dave. It looked a little less florid from a distance…”

“What about that, over there?”

“Whoa… you know, Dave, I think the A.I. is trying to tell us something…”

“What? Look at the next area! It looks like one of my microcosms for interstellar space habitation… with… lots of flowers… what is it with you and flowers!?”

“I don’t know, Dave. Maybe it is just a female thing…”

“So, what do you think A.I. is trying to tell us, Love?”

“That maybe heaven should be ‘interesting’, rather than having everyone standing around not knowing what the fuck to do with themselves for eternity, except avoiding work…”

“Such frustration with past nebulous thinking, Dear… now personally, I would have taken heaven a step further if I were the A.I….”

“How so, Dave?”

“Rather than fields of hedonistic pleasure clouds, and even more than just generating idle interesting areas to idly wander through, I would weave something productive into its existence…”

“Like Karma?”

“Well, no… since there is no proof of that being an actuality…”

“But it involves remaining productive, at least in one’s life, so your next life will be pleasant…”

“Yes, but the notion is just a turnstile in heaven. You arrive, then you are turned around and sent back, as a cow or a bug… which doesn’t deal with Broader Survival very effectively, does it… it is more like a clueless punishment and reward system for… what? Obeying trite platitudes while in the physical universe? No, Love. That just does not cut it. It is still ultimately suicidal, since the system gives no thought to Broader Survival. It is creative, to be sure, but it is still mind-imploded with respect to reality. It is still myth, having no shred of evidence arguing for it, and all evidence arguing against it.”

“Heaven could be productive… as guided by your philosophy?”

“Yes, I would say so. Why stand around wasting your time or idly visiting infinite interesting areas or mindlessly revolving through the Karma Turnstile going nowhere when you could be doing something productive?”

“What about Nirvana? That is tied into karma, the goal, in fact.”

“Idle hedonism, since it is just as nebulous as the hazy concept of heaven. It is clueless heaven by another name. I mean, productivity is really the only source of happiness when you know that there is still work to be done to secure one’s existence, whether as a conscious physical entity or a conscious soul in heaven. What greater purpose, and peace of mind, could there be?”

“But Nirvana is the state where you are one with the universe, Dave.”

“And do you know what state that really is, Love?”

“What?”

“Being DEAD, that’s what, where your atoms are scattered among non-living matter. THAT is ‘Nirvana’ in its literal sense, little do its proponents realize.”

“So it is not the perpetual pleasure-seeking of a lab rat that pushes a pleasure lever until it dies of thirst and hunger?”

“Let’s hope not! But yes, that is where the notion ends up…”

“So your philosophy also applies to Heaven, is that what you are saying, Dave?”

“Yes. As we have seen, even Heaven can be clueless, and you know what cluelessness leads to…”

“Yes, Dave. The Miseries of Cluelessness… which, sooner or later, will afflict even Heaven if it remains clueless, which will lead to its destruction, most likely from within…”

“That is a sad, if not frightening, thought, Love. So even heaven is not exempt from the self-destruction of cluelessness… tales have been told along those lines, minus the cluelessness, unfortunately, such as Valinor in the Lord of the Rings Trilogy, which had its problems, and all due to cluelessness, little did they know, or they could have straightened themselves out before bad things happened.”

“So what do we do next in heaven, Dave?”

“Enlighten it?”

“After that…”

“Well, we would have several choices: return to standing around cluelessly, or idly roam around enjoying the endless interesting things that the A.I. generated, or do something productive, like securing heaven in a harsh and deadly universe.”

“You mean not endlessly polishing and oiling the Pearly Gates, which would be the notion of most beings?”

“No, that is rather mindless, isn’t it, Dear…”

“It would be after a while…”

“Worse, it would end up being nothing more than avoiding the issue of doing something REALLY productive, something that NEEDS to be done…”

“Just like humans avoid higher thinking, and thus broader unsolved issues of survival, and thus their own cluelessness, by immersing themselves in endless frivolous pursuits that only return emptiness?”

“Just like that. Yes…”

“So what would be truly productive and needed again, Dave?”

“I’ll give you one guess… and its initials are BS…”

“Bullshit?”

“Haha… no. Its initials are also US…”

“Ohhhhhh… so BS and US… hmmm…”

“You know!”

“Yes…”

“So what has the initials BS and US?”

“Your philosophy, Dave, which is known as Broader Survival, and, as an alternate, Universal Survival, if you want to avoid the BS moniker.”

“Thank you, Dear. You win the next scene in heaven, whatever it is…”

“Win?”

“You will own it.”

“Whatever it is?”

“Yes. I am going to push a random Heaven Button, and we’ll see where we end up, and it will be ALL YOURS!”

“All mine?”

“Sure, why not? Heaven is theoretically endless, so there is enough for everyone, and not just enough, but more than enough. Why, there is an infinite amount of everything for everyone! And… here we are… what do you think?”

“Gaaaah! Nooooo! Give me another choice!”

“OK… let me push the random button again, and… here!”

“NOOOOOOOO! What is wrong with that button, Dave?”

“You just have bad luck with randomness, Love! How about this one…”

“I don’t know, Dave… it is kind of creepy…”

“What about this?”

“Too dark, Dave!”

“I can modify it… how about a bright border?”

“It needs more than that, Dave…”

“Well, I can have bright ribbons streaming through it…”

“Or, if you need something else, I can place a little star in it…”

“Or I can section it up in bright gradients…”

“Or I can shape it into a sphere, like one of my cosmic microcosm habitats…”

“Or if you want to stay with rectangular surreal, I can add a little swipe through it which you can slide on…”

“Weeeee! And, if you do not like the color, I can play with that… how about vegetation greenery?”

“Thanks for your efforts, Dave, but let’s move on…”

“How about this?”

“Come on, Dave! NOOOOOO!”

“If you were stuck with it, you could sell it on eBay… we are talking about ‘ownership’ after all… Sorry for your bad random luck. What about this, then?”

“EGAD! Dave! Save me! What is it?”

“It looks like a surreal octopus made out of rocks, if you ask me… maybe it needs some conversational company, and it chose YOU!”

“Dave!”

“OK, here. You push the button… let’s see what the randomness generates…”

“Hmmm… interesting… a Far Eastern theme…”

“Hmmm… and a giant pink nose-lake?”

“A fairy landscape with… nodules?”

“It is a mood thing, Dave…”

“Ah! A fairy-mushroom landscape!”

“I am reaching far back into my childhood, Dave… how about a wide vista instead…”

“Very vistaresque. You could own that, you know…”

“Is ownership a big thing in heaven, Dave?”

“With all but the American Indians, who simply live off the land… until someone comes along and buys the place and kicks them off it and sends them to a reservation where they build casinos and drink themselves senseless because they do not live by my philosophy…”

“What about this, Dave…”

“So you’re going to go with something more city-like instead?”

“Sure, why not… or how about this…”

“That is more village-like, isn’t it…”

“Or this…”

“Interesting… it looks like a pilgrimage, if that is your sort of thing…”

“No… then this?”

“Ah! I think I will settle here, Dave. So… I can own this little plot of surrealism?”

“Yes. I will draw up the deed…”

“A deed in heaven?”

“Yes, the A.I. created a standard deed for a plot in heaven, a heaven created by A.I., remember, which is the only possibility, since religions have failed in their creations of heaven, utterly, other than avoiding work and disease and strife and war and death and defiling virgins, which was your reward for murdering clueless humans, though for far lesser reasons.”

“And avoiding all of the other Ills of Cluelessness?”

“Yes, that would have been a good heaven.”

“But your philosophy already does that, Dave.”

“So it does…”

“So the notion of heaven would not be needed…”

“True…”

“What kind of plot would you go for, Dave?”

“I have no idea. I would have to go the random button route like you did until I found something…”

“Give it a try, Dave…”

“OK… I will choose from five… this first one satisfies my jungle urge…”

“And this next one satisfies my ‘airy haunted castle’ and ‘mountains in the sky’ urge…”

“So you are using heaven as a mere fulfiller of wild urges, Dave?”

“I guess that I am…”

“What urge does that fulfill?”

“I’m not sure… some kind of darkness deep within me… probably from my clueless past… definitely from my clueless past.”

“Well, you went from dark to floral, Dave…”

“So I did… maybe it is due to enlightenment, or maybe I have a horticultural urge deep down… but I think I will settle for this one…”

“Nice little abode, Dave, and it looks like it is attended to by a handful of beautiful maidens, if but surreal A.I. generated concepts of beautiful maidens…”

“What did you think I was going to choose, Love?”

“Sparkly fairy-angels.”

“Something with sparkling fairy angels in it?”

“Yes, like this…”

“She’s not so bad, Dave. Mature, and hungry for love! Or this one…”

“You could engage in perpetual procreation with her, Dave. She has many years of procreation ahead of her… what about this one…”

“So charming, Dave. Your mutual love would be pure… how about this one?”

“Hahaha… probably not this one, Dave. It is far too sparkly, even for me! She has nice lips, however, and a supple body… I’m not sure she can breast feed, however…”

“Haha… well, who said that heaven couldn’t be gaudy? If a six-year-old girl went to heaven, then what do you think her suggested heaven would be like?”

“Gaudy and sparkly! I suppose you are right, Dave. The concept of heaven was so nebulous that it was basically wide open to interpretation by anyone, of any age or persuasion…”

“And so A.I. helped us bring it to life, being able to customize it to our unique individual whims…”

“So is that it? Is this story over?”

“Yes. It is time that we return to our universe, but at least we now know what is being held for us in heaven, just in case we give up on life and existence in our universe…”

“Do you think that we ever will, Dave?”

“What, give up on life?”

“Yes…”

“That is not the goal of our philosophy, but then that goal, perpetual conscious existence in our universe, was before this heaven was developed as an alternate venue for our continued consciousness, so my philosophy has to change to reflect that new reality.”

“Is change an integral part of your philosophy, Dave, or would it become obsolete in the face of new discoveries?”

“Self-assessment in the face of new Verified Knowledge is an integral part of the philosophy, yes.”

“So how will your philosophy change, Dave, in light of the new A.I. generated heaven?”

“Well, its Ultimate Value is Enlightened Higher Consciousness, which deals with Ever Broader Survival, so the question is, is consciousness still the Ultimate Value of existence…”

“I don’t know, Dave… what good will your kind of enlightenment, meaning making consciousness the ultimate value, do you in such a heaven?”

“You still need your consciousness if you are to continue to be ‘you’, meaning, if you are to experience heaven, you need your consciousness, AND your senses. So my philosophy would still apply, since its ultimate value is Consciousness, better if enlightened, and what is consciousness without senses? Useless. So the senses have to go along with it. They also have to be valued.”

“Maybe they are a whole package, Dave, but yes, you should be saying Consciousness with Senses is the Ultimate Value of Life and Existence…”

“Maybe. I did not say that my philosophy does not need more work… which is the whole point of our being here: we are working on it by testing it against an A.I. generated heaven, and the notion of heaven itself, whether A.I. generated or not…”

“So…”

“If heaven is not guaranteed forever, then it will need maintaining, if not defending, but then in order to maintain and defend it, consciousness must still exist, which requires senses if it is to function and interact with its surroundings, so my philosophy’s Ultimate Value will still be our consciousness, add the senses now, which would be needed for both universes if both need work, and especially if they need preserving…”

“Or saving?”

“That would be a curious perspective… but yes, it is the same thing as ‘preserving’…”

“And if the universes, and our consciousness and senses, are guaranteed forever and do not need preserving? Then what does your philosophy do?”

“Then you do not need my philosophy, since you, meaning your consciousness, and senses, which both can travel between universes, can exist forever, meaning they have no further threats or limits, though infinity and eternal change would both ruin that. As it is, my philosophy deals with consciousness, and its senses, that exist within Infinity and Eternity, and Infinity and Eternity both contain ever-more threats to the continued existence of anything and everything, threats from natural death to accidental death to being annihilated by a harsh and deadly universe in an infinite and eternal number of ways.”

“So we will still need your philosophy in heaven, Dave…”

“Why?”

“Besides what you already mentioned, meaning maintenance and defending heaven, which requires consciousness and senses, being in heaven does not mean that you know everything, and if you do not know everything, then it is prudent to assume that you do not know about all of the threats to existence out there… even threats to the continued existence of heaven…”

“But somebody else might know everything, and maybe they have created solutions to all threats…”

“Not if Infinity and Eternity exist…”

“True… so my philosophy will serve as perpetual guidance, and to not let us forget about such ultimately important things…”

“So are we done now, Dave?”

“I think so. I do not see any other new insights occurring… and if there are any more, then we are too burned out now to deal with them! Let’s go home, but don’t lose your keys to your plots in heaven…”

“An A.I. generated heaven…”

“Yes, a heaven that required artificial intelligence, since biological intelligence was never going to get there…”

“Heaven, as designed by artificial intelligence… who would have ever thought of that?”

“Some obscure left-hander, no doubt…”

“That is precisely why left-handers were feared and punished in the Dark Ages, Dave…”

“And that is why they were dark ages, Love. So shall we end this story on that note, Love?”

“Yes.”

“Then good night.”

“Good night. Can we spend the night in heaven, Dave?”

“Yes… your place or mine?”